I had the opportunity to revisit Wiliam’s 2018 book, Embedded Formative Assessment, while looking for definitions of “formative assessment.” The first two chapters are simply priceless. Beyond those, each of the other chapters presents a general problem to be solved, and then practical techniques for solving it. Here’s an overview of what I consider the best parts…


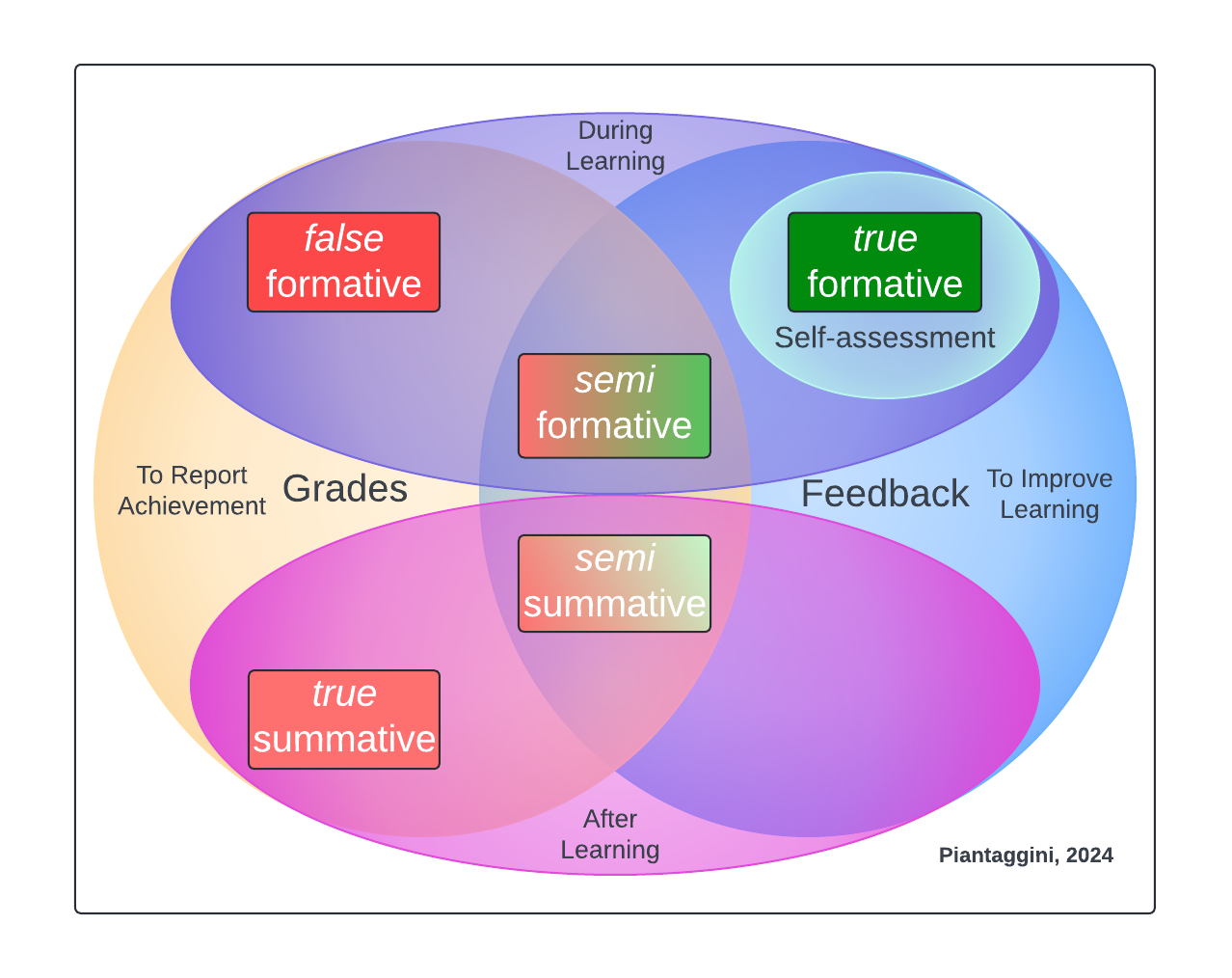

False Formatives

I just presented a poster session in Chicago for the NCME Special Conference on Classroom Assessment (Piantaggini, 2024). While I had some rough details for a proposed dissertation study, the focus of discussion with scholars who stopped by was my new assessment model and the theoretical framework that brought me to it. The message I got was “I think you’re onto something,” so I’m sharing my work here to get more eyes on it. Please contact me with any embarrassingly scathing criticism. Otherwise, reply publicly with any other thoughts or questions. After all, this is my blog, not peer review!

So, in this blog post, I’ll describe the model you see above, and how I got there, starting with a major dilemma I identified when reviewing literature on classroom assessment: confusion over grading formative assessments…

Current Reading: Formative Assessment

I’m going to start sharing some findings in a series called “Current Reading” as part of a lit review I’m doing on assessment and grading; nothing too fancy or cerebral, but definitely more than blog post ideas.

Why the announcement?!

On the one hand, this is not new. I’ve shared plenty of direct quotes and sources in my blog posts in the past. Also, consider this a symptom of being steeped in academia once again. I’m reading hundreds of pages of research a week, and it’s important to digest and keep track of studies that support my own research. This includes knowing who wrote about what, and when. On the other hand, second language acquisition (SLA) researcher Bill VanPatten mentioned something online recently when I shared a 2020 post with a summary of CI non-examples. His comment was that the ideas in that post were oddly familiar ones throughout the field. That’s completely true; I never claimed they were *my* original thoughts. Like many of my posts written to pass along information, that 2020 summary doesn’t include citations to any particular study. It’s a collection of ideas that have consensus in the SLA community, and that lack of citations was intentional, not an oversight.

Why intentional? For nearly all of my blog’s 12-year history, I never wrote for the academic community that would be interested in that kind of stuff. I was writing for other teachers. I sometimes added just a shorthand author and year (e.g., Feldman, 2018) to some statements, which would give most people what they needed to track down the original, if they really wanted to read it! In my experience, though, most teachers don’t read research, so I haven’t bothered much with bibliographies. Since I’m no longer teaching, and I’m using bibliographies a lot more these days, I do want to make a clear distinction between posts of the past and posts moving forward. Granted, my posts are still written for teachers, make no mistake! My degree program is Teacher Education and School Improvement (TESI), and I’m still sharing ideas for practical implementation. The one difference is that they’ll now include more breadcrumbs for everyone to follow, myself included. After all, there has been no better way for me, personally, to consolidate thoughts and work through concepts than by writing these blog posts. You might benefit as well. Now, for the good stuff…

Are Points Really THAT Bad?! Yup.

It’s been a couple years since I first read Grading for Equity, attended its 3-day workshop, and wrote a series of posts in the winter of 2022 on grading practices that scholars have shown to be inequitable. One perplexing thing, though, is that I do find myself around teachers who implement those inequitable practices daily, yet by all observable accounts still have high levels of equity! I’m not suggesting that the scholars are wrong. I’m also not suggesting that teachers should continue using those practices. Instead, I want to revisit why points are so bad, and then consider what else is at play in the classroom that might keep things equitable (to a limited extent). Oh, and this post doesn’t get into ANYTHING about dealing with points from a teacher bookkeeping perspective, which is enough of a hassle on its own. Even the teacher who somehow hasn’t heard of the word “equity” would benefit from ditching points altogether.

Grades: Going, Going, Gone!

Here’s a quick report on having gone nearly 100% gradeless. I say nearly because at my school, the halfway point of the quarter (i.e., progress reports) requires a grade. So, as of right now there’s a course grade that shows up. This practice isn’t quite in line with a true ungrading approach that would have a grade only at the very end of the grading period. I’m nearly there, and have a feeling this is as far as I’ll go, too. But that’s not a problem. There’s already been a big difference in the most important areas, and I expect things to get even better.

A Glossary Isn’t Enough & Replacing Comprehension Qs with Reflection Qs

After looking at various first day/week/month materials for the beginning language learner, I was reminded that most resources include texts with way too many words, way too soon. A full glossary certainly helps, but isn’t enough. Words need to be recycled often—especially in the beginning—to have a chance of being acquired by all learners (not just the ones with an excellent memory). If your text doesn’t recycle its vocab, you should adapt it. Remember, for a text to be truly readable (i.e. without starting to become laborious), students must understand 98 words in a text with 100 different ones, 49 words in a text with 50, and pretty much every word in a text of 25 (Hsueh-Chao & Nation, 2000).
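For anyone who wants to check a text of a different length, the arithmetic behind those numbers can be sketched in a few lines of Python. This is only a rough illustration: the 98% figure comes from the numbers above, while the function name `words_needed` and its `percent` parameter are made up for this example.

```python
def words_needed(different_words: int, percent: int = 98) -> int:
    """Smallest whole number of understood words that reaches the given percent.

    `percent` defaults to 98, the coverage level implied by the figures above
    (Hsueh-Chao & Nation, 2000). Integer math is used to round up without
    any floating-point surprises.
    """
    return (different_words * percent + 99) // 100


# The three text sizes mentioned above
for size in (100, 50, 25):
    print(f"{size} different words -> understand at least {words_needed(size)}")
```

A 25-word text works out to 24.5, which rounds up to all 25, hence “pretty much every word.”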

A full glossary is as close to cueing as we can get asynchronously, but we won’t know how students are using it. As part of evidence of engagement when reading a text, these Google Form reflection questions could shed light on that:

ex.

- How often did you look up the meaning of words?
  – Hella
  – A lot
  – Sometimes
  – Not very much
- What was your experience of looking up words?
  – No problem at all (i.e. it helped you read, or you didn’t mind looking up words)
  – It was OK (i.e. a little annoying looking up words, but not too bad)
  – It started getting hard to read (i.e. looking up words started feeling like “work”)
  – I kept looking at almost every word, so “reading” was really hard to do (i.e. this was a bad reading experience)
- Would you like Mr P to give you easier & shorter texts to read?
  – Yes
  – No

The first two tell us a student’s threshold for “noise” (i.e. how much incomprehension in the input they can handle), but the last question is going to be extra important. If a lot of students opt for “yes,” we can put effort into making easier texts for all (e.g. an additional simplified tier). Alternatively, we could reach out to a few individuals with support.
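If the form responses get exported to a spreadsheet, that decision could be sketched roughly like this. Everything here is an assumption for illustration: the function name `next_step`, the wording of the returned suggestions, and especially the `a_lot` cutoff of half the class, since “a lot of students” is a judgment call each teacher would make.

```python
from collections import Counter


def next_step(answers: list[str], a_lot: float = 0.5) -> str:
    """Suggest a follow-up based on answers to the last reflection question.

    `a_lot` is a hypothetical cutoff (share of "Yes" answers) standing in
    for "a lot of students"; adjust it to taste.
    """
    counts = Counter(answer.strip().lower() for answer in answers)
    total = sum(counts.values())
    if total == 0:
        return "no responses yet"
    if counts["yes"] / total >= a_lot:
        return "write an easier tier for everyone"
    if counts["yes"] > 0:
        return "reach out to a few individuals with support"
    return "keep the current texts"


print(next_step(["Yes", "No", "yes", "Yes", "No"]))
```

With three “Yes” answers out of five, the sketch suggests an easier tier for everyone; with only one “Yes” out of five, it would suggest reaching out to that individual instead.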

Support vs. Individualized Feedback

I wrote about how individualized feedback, especially when required, is largely a waste of time. Don’t confuse providing additional input to a student with giving individualized feedback for its own sake, on something that student completed (but that doesn’t need any reply), or worse, on correct/incorrect responses. That kind of individualized feedback isn’t worth our time, and isn’t even effective, pedagogically. When reflection Q responses indicate comprehension, we don’t need comprehension Qs.

In fact, rather than spending any time at all writing comprehension Qs, use data from the reflection Qs and spend time writing more comprehensible texts! That is, inasmuch as comprehension Qs are a student’s word on homework (i.e. remote learning), so are the reflections. It’s much more valuable to get a sense of how often a student is referring to the glossary (i.e. signs of incomprehension) than a percentage of X correct out of Y. Students are also more likely to report accurately how often they used the glossary, which itself is all the comprehension data we need.